Databricks

The Databricks Lakehouse Platform provides a unified set of tools for building, deploying, sharing, and maintaining enterprise-grade data solutions at scale. Databricks integrates with cloud storage and security in your cloud account, and manages and deploys cloud infrastructure on your behalf. Toric users can continuously and securely push data to and pull data from Databricks.

- Read and ingest from Databricks and transform data into interactive insights.

- Clean, process, and enrich raw data into a business-ready format, and export the transformed data back to Databricks.

- Create automations to ingest, run flows, or export data from Databricks on a schedule or trigger event, like a webhook or source update.

- Perform data engineering tasks on ingested data including processing, cleaning, enriching, and transforming data into insights.

Data Access

| API |
|---|
| REST API |

Don't see the endpoints you are looking for? We're always happy to make new endpoints available. Request an endpoint here!

Configuration guide

Setup time: 45 seconds

Requirements:

- Databricks Subscription

- Web Browser (Safari, Chrome, Edge, Firefox)

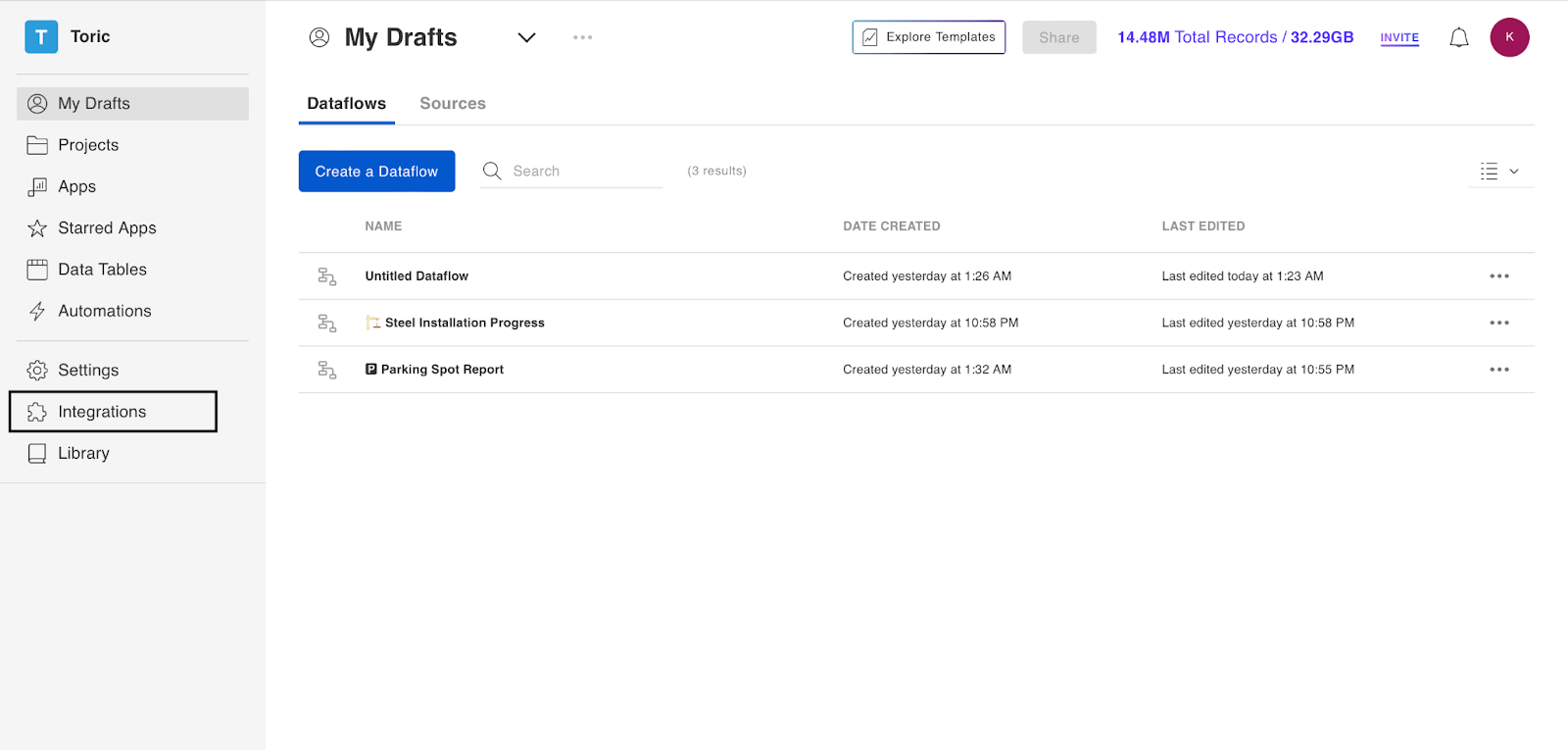

1. Log in to Toric and navigate to the Integrations page.

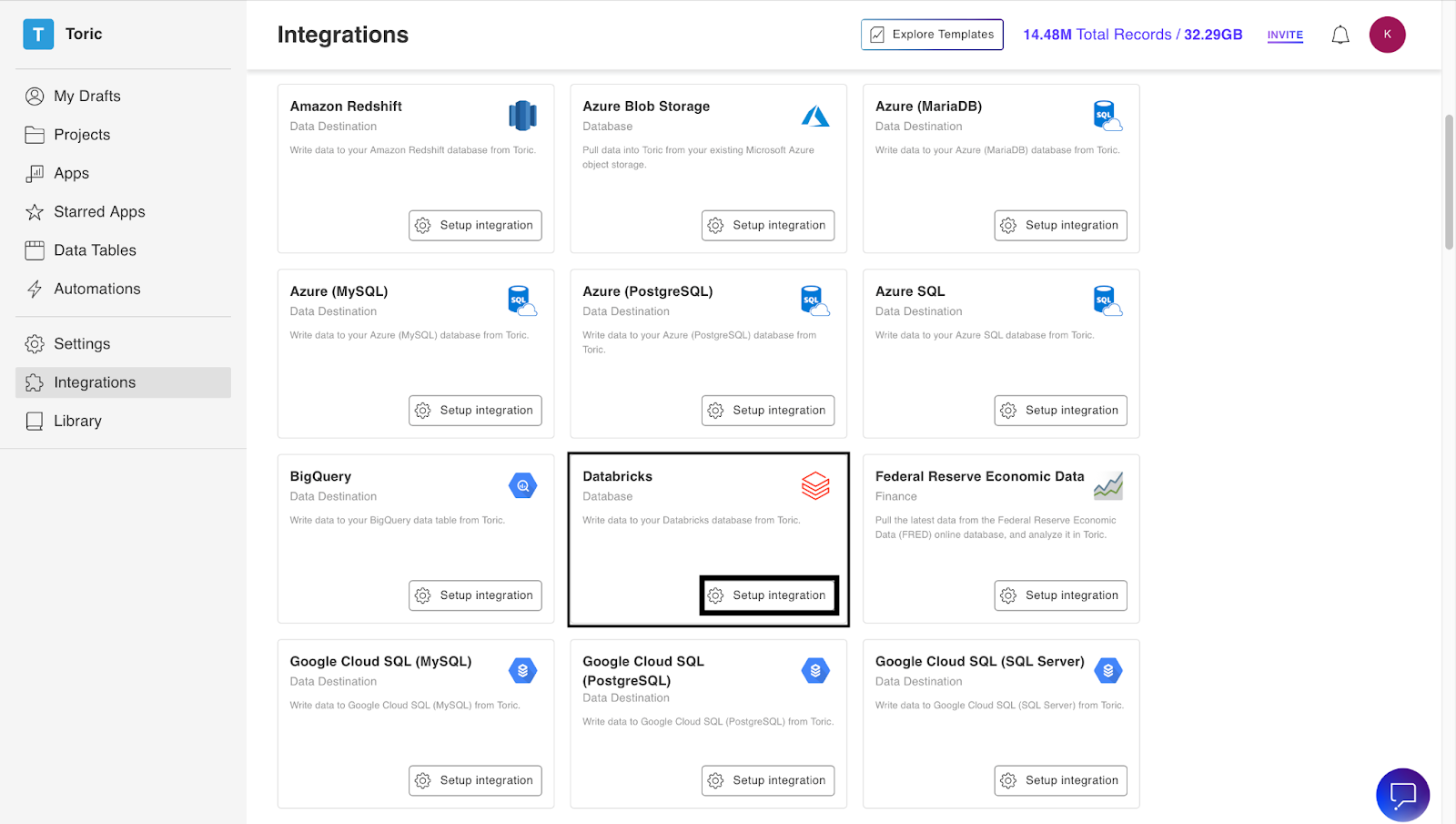

2. Click on “Setup Integration” in the Databricks thumbnail.

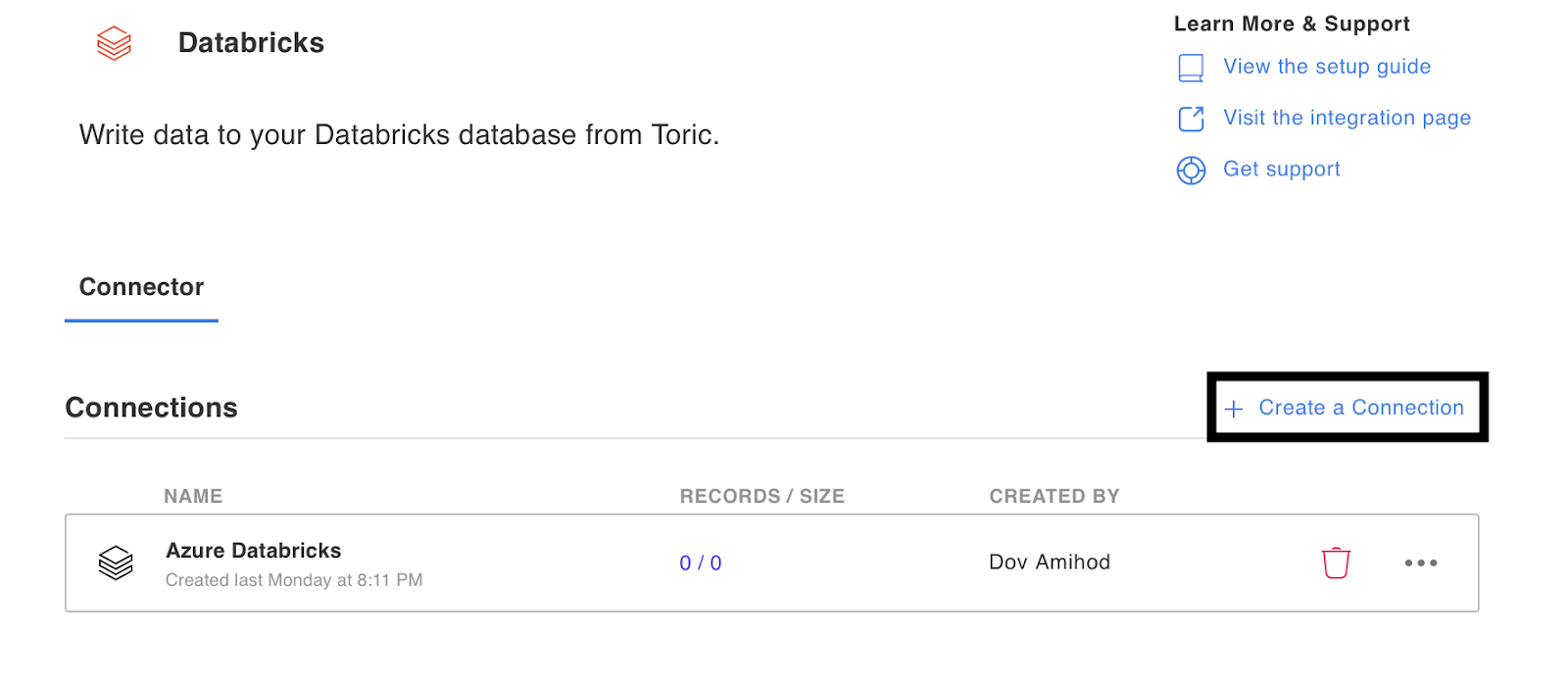

3. Click “Create a Connection” to set up a new Databricks connection.

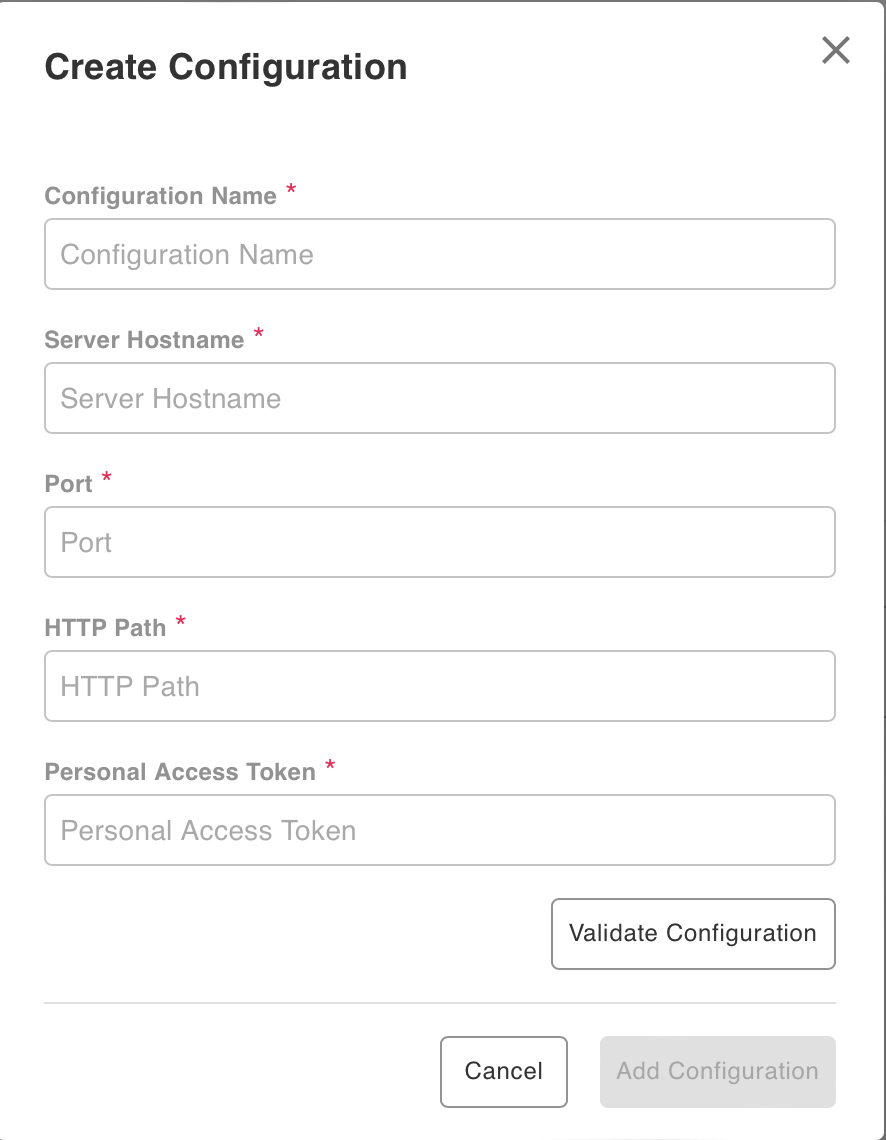

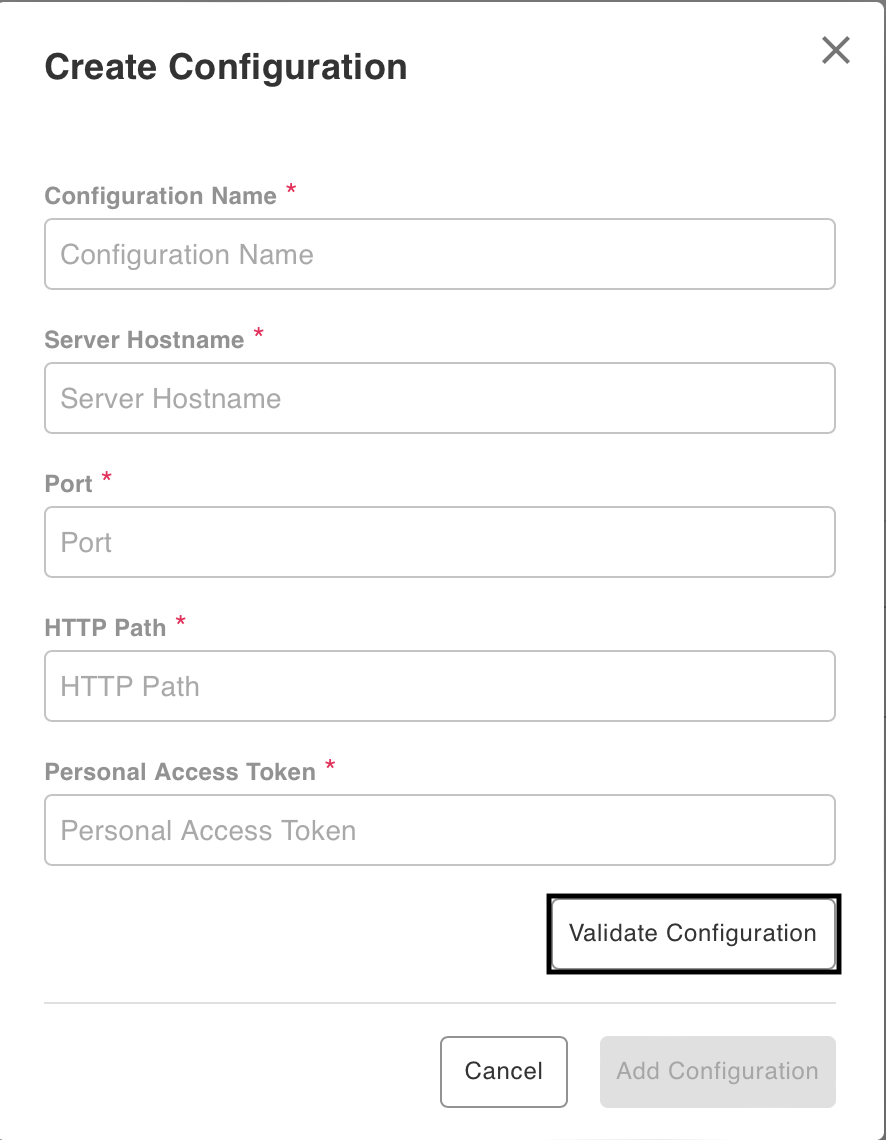

4. Enter the configuration information and credentials required for the new connection to your Databricks platform. Alternatively, you can contact Toric at support@toric.com to have the team set up this connector for you.

Fill out the following fields to establish the connection:

a. Configuration Name

b. Server Hostname

c. Port

d. HTTP Path

e. Personal Access Token
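As a rough illustration of how these five fields fit together, the sketch below models them in Python and runs a few basic sanity checks before you paste the values into Toric. The class name `DatabricksConnection`, its `problems` method, the placeholder values, and the specific checks are all assumptions made for this example. They are not part of Toric or Databricks, and Toric's own validation may apply different rules.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DatabricksConnection:
    """Hypothetical container mirroring the five fields in the Toric form."""

    configuration_name: str
    server_hostname: str
    port: int
    http_path: str
    personal_access_token: str

    def problems(self) -> List[str]:
        """Return human-readable issues; an empty list means nothing obvious is wrong."""
        issues = []
        if not self.configuration_name.strip():
            issues.append("Configuration Name is empty")
        host = self.server_hostname.strip()
        if not host:
            issues.append("Server Hostname is empty")
        elif "://" in host or "/" in host:
            # Assumed rule: the field expects a bare host, not a full URL.
            issues.append("Server Hostname should not include https:// or a path")
        if not 1 <= self.port <= 65535:
            issues.append("Port must be between 1 and 65535")
        if not self.http_path.strip("/"):
            issues.append("HTTP Path is empty")
        if not self.personal_access_token.strip():
            issues.append("Personal Access Token is empty")
        return issues


# Placeholder values only; substitute the details from your own workspace.
example = DatabricksConnection(
    configuration_name="My Databricks",
    server_hostname="https://example.cloud.databricks.com",
    port=443,
    http_path="/sql/1.0/warehouses/abc123",
    personal_access_token="placeholder-token",
)
print(example.problems())
```

With the placeholder values above, the only issue reported is the `https://` prefix on the hostname. Removing it leaves an empty list.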

Locating Your Configuration Details in Databricks

To locate your configuration details in Databricks, follow these steps:

- In your Databricks Workspace, click on "SQL Warehouses" in the left-side menu.

- Select the warehouse you would like to connect to Toric.

- Click on “Connection Details”.

You should now see the Server Hostname, Port, and HTTP Path. The only field still missing is the Personal Access Token.

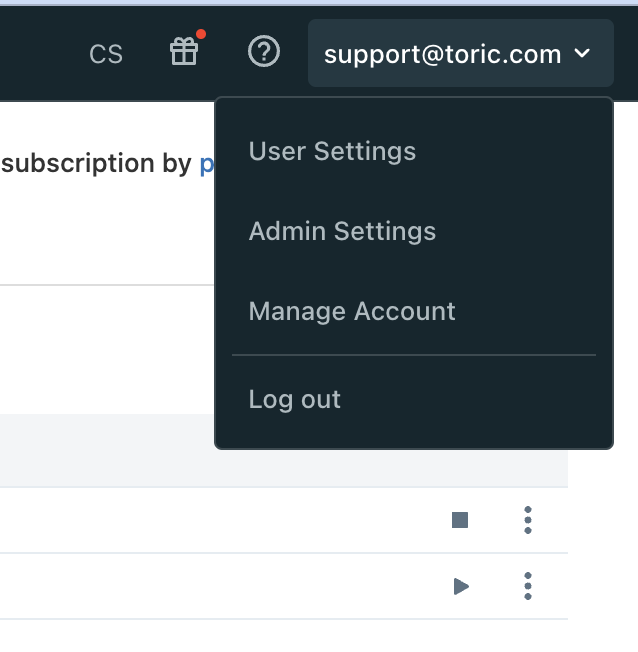

- In the upper-right menu, click on the down-arrow icon.

- Click on User Settings

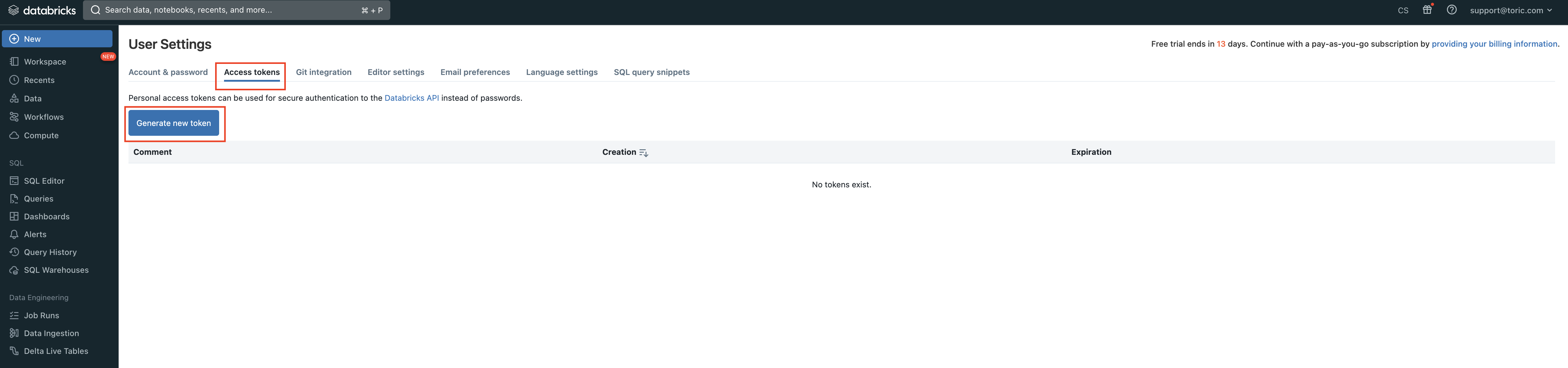

- Click on “Access tokens”

- Click on Generate New Token

- Copy this token and paste it into the Toric Configuration window.

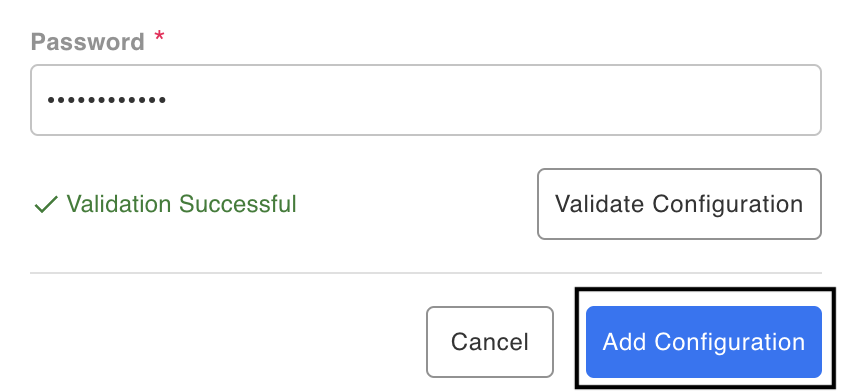
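If you would like to check the hostname and token yourself before entering them in Toric, one option is to call the Databricks REST API directly. The sketch below only builds a request to the SQL Warehouses list endpoint (`/api/2.0/sql/warehouses`) using a bearer token. The function name `build_warehouse_list_request` and the placeholder values are assumptions for this example, and this is not necessarily the check Toric performs when you validate the configuration.

```python
import urllib.request


def build_warehouse_list_request(server_hostname: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists SQL warehouses."""
    url = f"https://{server_hostname}/api/2.0/sql/warehouses"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Placeholder values only; use your own Server Hostname and token.
request = build_warehouse_list_request("example.cloud.databricks.com", "placeholder-token")
print(request.full_url)

# To actually send it, uncomment the lines below. An invalid token is
# expected to raise urllib.error.HTTPError (for example, 401 or 403).
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status)
```

Building the request separately from sending it keeps the token out of any output until you decide to make the call.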

5. Once you’ve completed all fields, click “Validate Configuration.” Note: Invalid fields are highlighted in red, and a successful validation is shown in green.

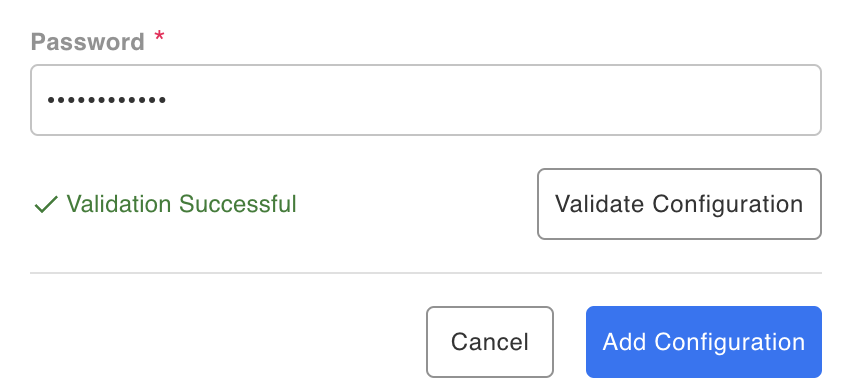

6. If the information entered is valid, a “Validation Successful” message will appear.

7. Click on “Add Configuration” to finalize your connector.

You have successfully finished setting up your Databricks Integration!

Integration Capabilities Supported by the Connector

Toric ingests data from and writes data to Databricks via the REST API. The Databricks connector supports source, destination, and storage capabilities in Toric.

Related articles

https://www.toric.com/support/configure-databricks-connector

https://www.toric.com/blog/torics-data-storage-capabilities-explained

Questions?

We're very happy to help answer any questions you may have. Contact support here or send us an email at support@toric.com.